Ari Rapkin Blenkhorn

Brief Biography

Dr. Ari Rapkin Blenkhorn has been at the Johns Hopkins Applied Physics Lab since 2019.

As a member of the XR Collaboration Center team, she writes software applications, evaluates new systems and devices, and advises colleagues and clients who are considering using XR for their projects. She also runs the Applied Physics Lab's annual XR symposium.

Ari's career in computer graphics and XR goes back almost 30 years. Past employers include Industrial Light & Magic, the US Naval Academy, and several startups, including Perceptive Pixel and two that she co-founded. Her XR-related work includes camera-based tracking systems, real-time rendering, and simulated experiences for submarine crews, fighter pilots, and rats.

Ari earned her PhD in computer science from the University of Maryland, Baltimore County (UMBC); master's degrees in computer science from Carnegie Mellon and the University of Virginia, where she studied with "Last Lecture" professor Randy Pausch; and a bachelor's degree in math from Johns Hopkins.

Dissertation Research

GPU-accelerated rendering of atmospheric glories


Glories are colorful atmospheric phenomena related to rainbows and coronas. They are commonly seen from aircraft when clouds are present and the sun is on the opposite side of the aircraft from the clouds, so that the glory's colored rings surround the aircraft's shadow.

I have developed a highly parallel GPGPU implementation of the Mie scattering equations that accelerates the calculation of per-wavelength light scattering. The Mie calculation for each combination of scattering angle and wavelength is independent of all the others, so they can be performed simultaneously. My implementation dispatches large batches of these calculations to the GPU to process in parallel.

I use a two-dimensional Sobol sequence to sample the (wavelength, scattering angle) space. Sobol samples are well distributed, without large gaps or clumps, which reduces the number of scattering calculations needed to achieve visually acceptable results. The Sobol sampling calculations are also performed in parallel, using a recently developed technique that precomputes partial results.

Overall, this work renders atmospheric glories much faster than previous serial CPU techniques while maintaining high visual fidelity, as measured by both physical and perceptual image error metrics. It also yields equivalent-quality results with far fewer Mie calculations. The results obtained for glories apply fully or in part to related atmospheric phenomena.
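The Sobol sampling step described above can be illustrated with a small CPU-side Python sketch. It evaluates the first two dimensions of the Sobol sequence bit by bit (dimension 0 is the base-2 van der Corput sequence; dimension 1 uses the direction numbers generated by the primitive polynomial x + 1) and maps each point to a (wavelength, scattering angle) pair. It does not reproduce the GPU implementation or the precomputed-partial-results technique mentioned above; the function names and the default ranges (380 to 780 nm, 0 to 180 degrees) are illustrative assumptions.

```python
"""Minimal 2D Sobol sampler for (wavelength, scattering angle) pairs.

Illustrative sketch only: names and default ranges are assumptions,
not taken from the dissertation implementation.
"""
from typing import List, Tuple


def sobol_2d(index: int) -> Tuple[float, float]:
    """Return point number `index` (0 <= index < 2**32) of the 2D Sobol
    sequence in [0, 1)^2."""
    x = 0          # dimension 0 accumulator (32-bit fixed point)
    y = 0          # dimension 1 accumulator (32-bit fixed point)
    v0 = 1 << 31   # dim 0 direction numbers: 1/2, 1/4, 1/8, ... (radical inverse)
    v1 = 1 << 31   # dim 1 direction numbers, from primitive polynomial x + 1
    i = index
    while i:
        if i & 1:          # XOR in the direction number for each set bit
            x ^= v0
            y ^= v1
        i >>= 1
        v0 >>= 1           # next power of two for dimension 0
        v1 ^= v1 >> 1      # m_k = 2*m_(k-1) XOR m_(k-1), in fixed point
    return x / 2**32, y / 2**32


def sample_wavelength_angle(
    n: int,
    wavelength_nm: Tuple[float, float] = (380.0, 780.0),  # assumed visible range
    angle_deg: Tuple[float, float] = (0.0, 180.0),        # assumed angle range
) -> List[Tuple[float, float]]:
    """Map the first n Sobol points to (wavelength in nm, angle in degrees)."""
    (w0, w1), (a0, a1) = wavelength_nm, angle_deg
    samples = []
    for k in range(n):
        u, v = sobol_2d(k)
        samples.append((w0 + u * (w1 - w0), a0 + v * (a1 - a0)))
    return samples


if __name__ == "__main__":
    # Each pair would be an independent Mie evaluation, so on a GPU
    # every sample could be handled by its own thread.
    for wavelength, angle in sample_wavelength_angle(8):
        print(f"{wavelength:6.1f} nm  {angle:6.2f} deg")
```

Among the first 16 points, every quarter-by-quarter cell of the unit square contains exactly one sample; this stratification is what keeps Sobol samples free of large gaps and clumps.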

My goal is to produce perceptually accurate images of atmospheric phenomena at real-time rates for use in games, VR, and other interactive applications.


Poster presented at SIGGRAPH 2015.

Additional Research

RatCAVE: calibration of a projection virtual reality system


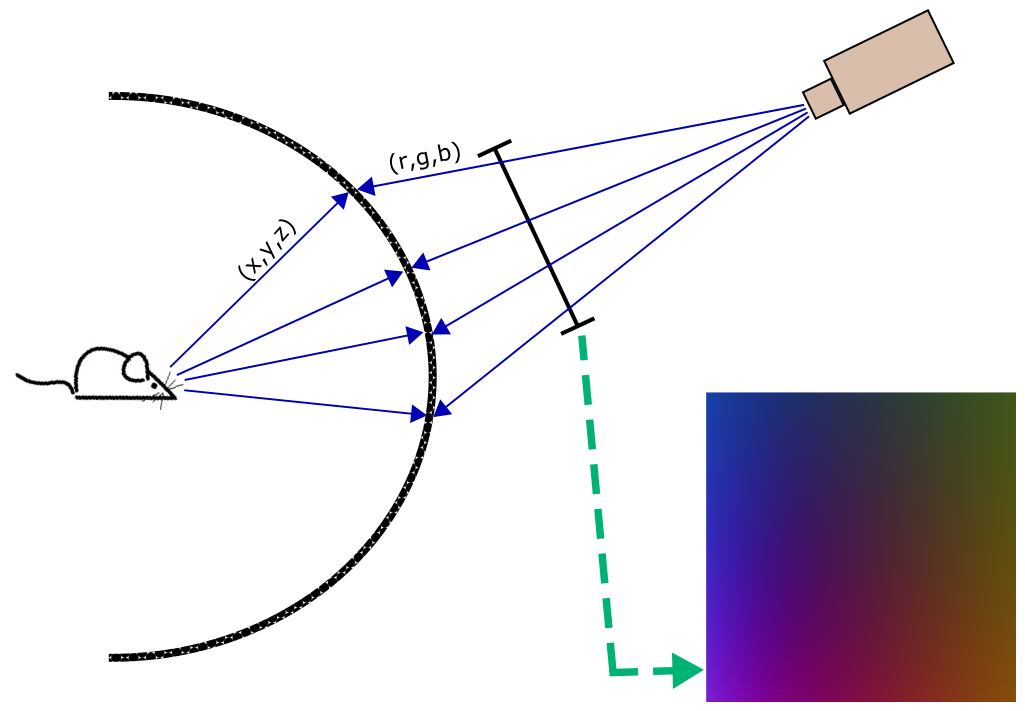

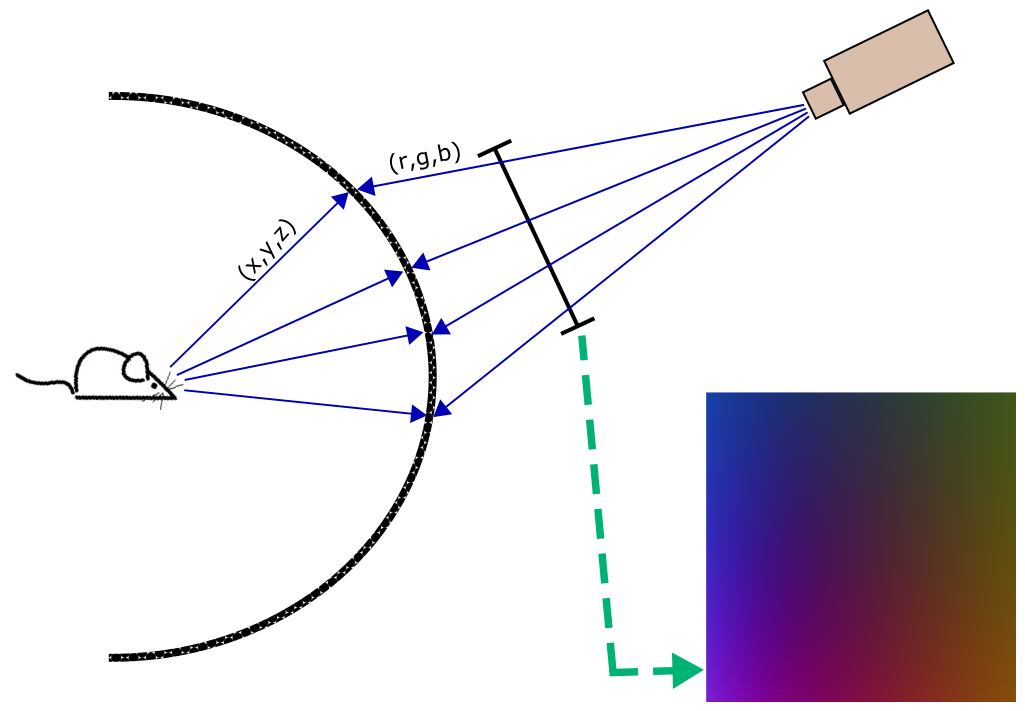

Collaboration between UMBC computer science researchers and Howard Hughes Medical Institute neuroscientists.

We have created a suite of automated tools to calibrate and configure a projection virtual reality system. Test subjects (rats) explore an interactive computer-graphics environment presented on a large curved screen using multiple projectors. The locations and characteristics of the projectors can vary, and the shape of the screen may be complex.

We reconstruct the 3D geometry of the screen and the location of each projector using shape-from-motion and structured-light multi-camera computer vision techniques. We determine which projected pixel corresponds to a given view direction for the rat and store this information in a warp map for each projector. At run time, each projector pixel uses its stored view direction to look up a color in an animated cubemap. The result is a pre-distorted output image that appears undistorted from the rat's viewpoint when displayed on the screen.
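The per-pixel cubemap lookup described above can be sketched in Python, assuming the warp map stores one unit view direction per projector pixel (None where a pixel does not land on the screen) and the cubemap is held as six small images keyed by face name. Face selection follows the OpenGL cube-map convention, and sampling is nearest-neighbor; these are choices made for this sketch, as are all the names. A real system would perform this lookup every frame in a fragment shader rather than in Python loops.

```python
"""Sketch: build a pre-distorted projector frame from a warp map and a cubemap.

Assumptions (not from the RatCAVE work): warp-map layout, face names,
nearest-neighbor sampling, and OpenGL's cube-map face orientation.
"""
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[int, int, int]
Image = List[List[Color]]              # rows of RGB pixels
WarpMap = List[List[Optional[Vec3]]]   # per-pixel view direction, or None


def cubemap_face_uv(d: Vec3) -> Tuple[str, float, float]:
    """Map a nonzero direction to (face, s, t), with s and t in [0, 1],
    using the OpenGL cube-map face-selection table."""
    x, y, z = d
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:      # X is the major axis
        face, ma, sc, tc = ("+x", ax, -z, -y) if x > 0 else ("-x", ax, z, -y)
    elif ay >= az:                 # Y is the major axis
        face, ma, sc, tc = ("+y", ay, x, z) if y > 0 else ("-y", ay, x, -z)
    else:                          # Z is the major axis
        face, ma, sc, tc = ("+z", az, x, -y) if z > 0 else ("-z", az, -x, -y)
    return face, 0.5 * (sc / ma + 1.0), 0.5 * (tc / ma + 1.0)


def sample_nearest(cubemap: Dict[str, Image], d: Vec3) -> Color:
    """Nearest-neighbor lookup of the cubemap texel seen along direction d."""
    face, s, t = cubemap_face_uv(d)
    img = cubemap[face]
    h, w = len(img), len(img[0])
    col = min(int(s * w), w - 1)   # clamp s == 1.0 onto the last column
    row = min(int(t * h), h - 1)   # clamp t == 1.0 onto the last row
    return img[row][col]


def apply_warp_map(warp: WarpMap, cubemap: Dict[str, Image],
                   background: Color = (0, 0, 0)) -> Image:
    """Build one projector's frame: each pixel samples the cubemap along its
    stored view direction; pixels that miss the screen get the background."""
    return [[background if d is None else sample_nearest(cubemap, d) for d in row]
            for row in warp]


if __name__ == "__main__":
    # Solid-color faces make it easy to see which face each pixel hit.
    colors = {"+x": (255, 0, 0), "-x": (0, 255, 255), "+y": (0, 255, 0),
              "-y": (255, 0, 255), "+z": (0, 0, 255), "-z": (255, 255, 0)}
    cube = {f: [[c, c], [c, c]] for f, c in colors.items()}
    warp = [[(1.0, 0.0, 0.0), (0.0, 0.0, -1.0)],
            [None, (0.0, 1.0, 0.2)]]
    print(apply_warp_map(warp, cube))
    # prints [[(255, 0, 0), (255, 255, 0)], [(0, 0, 0), (0, 255, 0)]]
```

Because the warp map is computed once during calibration, the per-frame work reduces to re-rendering the cubemap and doing one lookup per projector pixel, independent of how complex the screen shape is.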


Poster presented at SIGGRAPH 2016.


Education

- Ph.D., University of Maryland, Baltimore County (UMBC), Department of Computer Science and Electrical Engineering, December 2018. Dissertation: GPU-accelerated rendering of atmospheric glories

- M.S. in Computer Science (graphics and animation), Carnegie Mellon University, December 1997

- M.C.S. in Computer Science (computer vision), University of Virginia, May 1995

- B.A. in Mathematics, Johns Hopkins University, January 1992

Employment Highlights

- Senior Professional Staff, Johns Hopkins Applied Physics Laboratory, 2019-present

- Senior Software Engineer, Perceptive Pixel, 2010-2012

- Founder and Human-Computer Interaction specialist, Two Lights Technologies, 2007-2010

- Software R&D Engineer, Industrial Light & Magic, 1998-2006

- Adjunct professor, Math Department, US Naval Academy, 2012-2013

- Adjunct professor, Computer Science Department, University of San Francisco, 2005

- Math instructor and program coordinator, Johns Hopkins University Center for Talented Youth, 1989-1994

- Research Intern, Graphics and Interaction Research lab, Xerox Palo Alto Research Center (PARC), Palo Alto, CA, 1996

- Research Intern, Lectrice project, DEC Systems Research Center (SRC), Palo Alto, CA, 1995

I have one US patent, two skydiving world records, and credits on six films.

My Erdős number is 4, and my Bacon number is 3.

If you enjoy knitting, crochet, and other fiber arts, find me on Ravelry.